iRedMail

Attention

Check out the lightweight on-premises email archiving software developed by the iRedMail team: Spider Email Archiver.

Warning

This tutorial was contributed by Setyo Prayitno <jrsetyo _at_ gmail.com> (forum user name t10) on March 13, 2016.

Thanks, Setyo. :) The iRedMail team doesn't offer technical support for this setup.

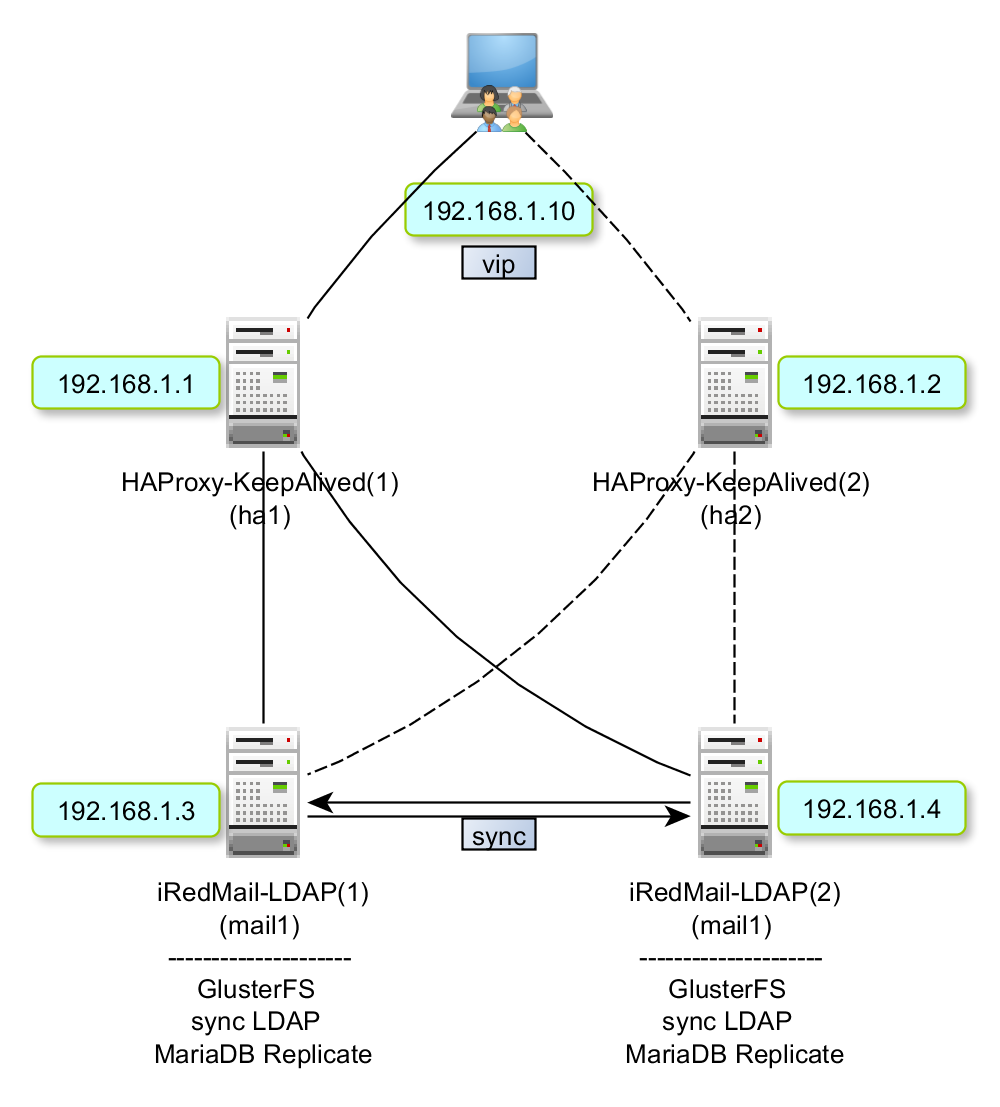

Build a fail-over cluster with 4 servers: 2 backend iRedMail servers behind 2 load balancers running HAProxy and Keepalived.

We use example.com as the mail domain name in this document. All 4 servers run CentOS 7.

The big picture:

Hostnames and IP addresses:

We use the hostnames ha1.example.com and ha2.example.com for the 2 servers that run HAProxy and Keepalived (ha1 and ha2 for short).

We use the hostnames mail1.example.com and mail2.example.com for the 2 servers that run iRedMail for mail services (mail1 and mail2 for short).

IP addresses:

192.168.1.1 ha1

192.168.1.2 ha2

192.168.1.3 mail1

192.168.1.4 mail2

The procedure:

Install Keepalived and HAProxy on 2 servers (ha1 & ha2)

/etc/hosts:

192.168.1.1 ha1

192.168.1.2 ha2

192.168.1.3 mail1

192.168.1.4 mail2
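Both load balancers need these entries. If you prefer to script this step, here is a minimal bash sketch; `add_hosts_entries` is a hypothetical helper (not part of iRedMail or CentOS) that appends an entry only when its hostname is not already mapped, so it is safe to run more than once.

```bash
#!/usr/bin/env bash
# add_hosts_entries (hypothetical helper): append "IP hostname" pairs to a
# hosts file, skipping any hostname that is already mapped there.
add_hosts_entries() {
  local hosts_file="$1" entry ip name
  shift
  touch "$hosts_file"
  for entry in "$@"; do
    ip="${entry%% *}"     # text before the first space
    name="${entry##* }"   # text after the last space
    # awk exits 0 when a non-comment line already lists this hostname.
    if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit (found ? 0 : 1) }' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

For example, on ha1 and ha2: `add_hosts_entries /etc/hosts "192.168.1.1 ha1" "192.168.1.2 ha2" "192.168.1.3 mail1" "192.168.1.4 mail2"`.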

yum install -y keepalived

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_DEFAULT

nano /etc/keepalived/keepalived.conf

vrrp_script chk_haproxy {

script "killall -0 haproxy" # check the haproxy process

interval 2 # every 2 seconds

weight 2 # add 2 points if OK

}

vrrp_instance VI_1 {

interface eth0 # interface to monitor

state MASTER # MASTER on ha1, BACKUP on ha2

virtual_router_id 51

priority 101 # 101 on ha1, 100 on ha2

virtual_ipaddress {

192.168.1.10 # virtual ip address

}

track_script {

chk_haproxy

}

}

On ha2, /etc/keepalived/keepalived.conf (in both files, change eth0 to your existing network interface):

vrrp_script chk_haproxy {

script "killall -0 haproxy" # check the haproxy process

interval 2 # every 2 seconds

weight 2 # add 2 points if OK

}

vrrp_instance VI_1 {

interface eth0 # interface to monitor

state BACKUP # MASTER on ha1, BACKUP on ha2

virtual_router_id 51

priority 100 # 101 on ha1, 100 on ha2

virtual_ipaddress {

192.168.1.10 # virtual ip address

}

track_script {

chk_haproxy

}

}
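Apart from the interface name, the two keepalived.conf files are meant to differ only in `state` and `priority` (MASTER/101 on ha1, BACKUP/100 on ha2). A minimal bash sketch that prints the file for either node from one template; `render_keepalived_conf` is a hypothetical helper, and the interface defaults to eth0 as in the examples above.

```bash
#!/usr/bin/env bash
# render_keepalived_conf (hypothetical helper): print keepalived.conf for ha1
# (MASTER, priority 101) or ha2 (BACKUP, priority 100).
render_keepalived_conf() {
  local role="$1" iface="${2:-eth0}" state priority
  case "$role" in
    ha1) state=MASTER; priority=101 ;;
    ha2) state=BACKUP; priority=100 ;;
    *) echo "usage: render_keepalived_conf ha1|ha2 [interface]" >&2; return 1 ;;
  esac
  cat <<EOF
vrrp_script chk_haproxy {
    script "killall -0 haproxy"   # check the haproxy process
    interval 2                    # every 2 seconds
    weight 2                      # add 2 points if OK
}

vrrp_instance VI_1 {
    interface ${iface}
    state ${state}
    virtual_router_id 51
    priority ${priority}
    virtual_ipaddress {
        192.168.1.10
    }
    track_script {
        chk_haproxy
    }
}
EOF
}
```

For example, on ha2: `render_keepalived_conf ha2 eth0 > /etc/keepalived/keepalived.conf`. Because `weight 2` is added to the priority while `killall -0 haproxy` succeeds, the effective priorities are 103 (ha1) and 102 (ha2) when HAProxy runs on both; if HAProxy stops on ha1, ha1 drops back to 101, below ha2's 102, and ha2 takes over 192.168.1.10.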

systemctl enable keepalived

systemctl start keepalived

ip a
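On the node that currently holds the virtual IP (ha1 while it is MASTER), `ip a` should list 192.168.1.10. To check this from a script, here is a bash sketch with a hypothetical helper `holds_vip` that reads the one-address-per-line output of `ip -o -4 addr show`:

```bash
#!/usr/bin/env bash
# holds_vip (hypothetical helper): read `ip -o -4 addr show` output on stdin and
# succeed if the given IPv4 address is configured on any interface.
holds_vip() {
  local vip="$1"
  awk -v vip="$vip" '
    $3 == "inet" { split($4, a, "/"); if (a[1] == vip) found = 1 }
    END { exit (found ? 0 : 1) }'
}
```

Usage: `ip -o -4 addr show | holds_vip 192.168.1.10 && echo "this node holds the virtual IP"`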

yum install -y haproxy

mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg_DEFAULT

On ha1, /etc/haproxy/haproxy.cfg:

global

log 127.0.0.1 local0

log 127.0.0.1 local1 debug

maxconn 45000 # Total Max Connections.

daemon

nbproc 1 # Number of HAProxy processes.

defaults

timeout server 86400000

timeout connect 86400000

timeout client 86400000

timeout queue 1000s

# [HTTP Site Configuration]

listen http_web 192.168.1.10:80

bind *:80

bind *:443 ssl crt /etc/ssl/iredmail.org/iredmail.org.pem

redirect scheme https if !{ ssl_fc }

mode http

balance roundrobin # Load Balancing algorithm

option httpchk

option forwardfor

server mail1 192.168.1.3:80 weight 1 maxconn 512 check

server mail2 192.168.1.4:80 weight 1 maxconn 512 check

# [HTTPS Site Configuration]

listen https_web 192.168.1.10:443

mode tcp

balance source # Load Balancing algorithm

# No reqadd here: HTTP header rules do not apply in mode tcp, which passes TLS through untouched

server mail1 192.168.1.3:443 weight 1 maxconn 512 check

server mail2 192.168.1.4:443 weight 1 maxconn 512 check

# Reporting

listen stats

bind :9000

mode http

# Enable statistics

stats enable

# Hide HAProxy version, a necessity for any public-facing site

stats hide-version

# Show text in authentication popup

stats realm Authorization

# URI of the stats page: localhost:9000/haproxy_stats

stats uri /haproxy_stats

# Set a username and password

stats auth yourUsername:yourPassword

On ha2, /etc/haproxy/haproxy.cfg:

global

log 127.0.0.1 local0

log 127.0.0.1 local1 debug

maxconn 45000 # Total Max Connections.

daemon

nbproc 1 # Number of HAProxy processes.

defaults

timeout server 86400000

timeout connect 86400000

timeout client 86400000

timeout queue 1000s

# [HTTP Site Configuration]

listen http_web 192.168.1.10:80

bind *:80

bind *:443 ssl crt /etc/ssl/iredmail.org/iredmail.org.pem

redirect scheme https if !{ ssl_fc }

mode http

balance roundrobin # Load Balancing algorithm

option httpchk

option forwardfor

server mail1 192.168.1.3:80 weight 1 maxconn 512 check

server mail2 192.168.1.4:80 weight 1 maxconn 512 check

# [HTTPS Site Configuration]

listen https_web 192.168.1.10:443

mode tcp

balance source # Load Balancing algorithm

# No reqadd here: HTTP header rules do not apply in mode tcp, which passes TLS through untouched

server mail1 192.168.1.3:443 weight 1 maxconn 512 check

server mail2 192.168.1.4:443 weight 1 maxconn 512 check

# Reporting

listen stats

bind :9000

mode http

# Enable statistics

stats enable

# Hide HAProxy version, a necessity for any public-facing site

stats hide-version

# Show text in authentication popup

stats realm Authorization

# URI of the stats page: localhost:9000/haproxy_stats

stats uri /haproxy_stats

# Set a username and password

stats auth yourUsername:yourPassword
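HAProxy can check a configuration file for syntax errors with `haproxy -c -f /etc/haproxy/haproxy.cfg`. It can also help to list which sections listen on which port: in the configuration above, `http_web` (`bind *:443 ssl ...`) and `https_web` (`192.168.1.10:443`) both use port 443, which is worth reviewing. A rough bash/awk sketch of such a listing; both function names are hypothetical, and the parser only understands the `listen`/`frontend`/`bind` forms used in this document.

```bash
#!/usr/bin/env bash
# list_haproxy_binds (hypothetical helper): print "<section> <address:port>" for
# the address on each "listen"/"frontend" line and for every "bind" line.
list_haproxy_binds() {
  awk '
    $1 == "global" || $1 == "defaults" || $1 == "backend" { section = "" }
    $1 == "listen" || $1 == "frontend" {
      section = $2
      if (NF >= 3 && $3 !~ /^#/) print section, $3
    }
    $1 == "bind" && section != "" { print section, $2 }' "$@"
}

# duplicate_haproxy_ports (hypothetical helper): print each port that is
# claimed by more than one section.
duplicate_haproxy_ports() {
  list_haproxy_binds "$@" |
    awk '{ n = split($2, parts, ":"); print $1, parts[n] }' |
    sort -u | awk '{ print $2 }' | sort | uniq -d
}
```

Running `duplicate_haproxy_ports /etc/haproxy/haproxy.cfg` on the configuration above prints 443. Separately, note that whichever node is BACKUP does not hold 192.168.1.10, and Linux only lets HAProxy bind to an address the host does not own when `net.ipv4.ip_nonlocal_bind = 1` is set (for example in /etc/sysctl.conf, applied with `sysctl -p`).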

Create a self-signed SSL certificate for HAProxy (used by the HTTPS redirect to the iRedMail servers):

mkdir /etc/ssl/iredmail.org/

openssl genrsa -out /etc/ssl/iredmail.org/iredmail.org.key 2048

openssl req -new -key /etc/ssl/iredmail.org/iredmail.org.key -out /etc/ssl/iredmail.org/iredmail.org.csr

openssl x509 -req -days 365 -in /etc/ssl/iredmail.org/iredmail.org.csr -signkey /etc/ssl/iredmail.org/iredmail.org.key -out /etc/ssl/iredmail.org/iredmail.org.crt

cat /etc/ssl/iredmail.org/iredmail.org.crt /etc/ssl/iredmail.org/iredmail.org.key > /etc/ssl/iredmail.org/iredmail.org.pem
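The `openssl req` command above asks its questions interactively. A non-interactive bash sketch that creates the same key, self-signed certificate, and combined PEM in one step; the function name `make_selfsigned_pem`, the `-subj` common name, and the `chmod 600` are assumptions rather than part of the original procedure.

```bash
#!/usr/bin/env bash
# make_selfsigned_pem (hypothetical helper): create <dir>/<name>.key, .crt and
# the combined .pem (certificate followed by key) referenced by HAProxy's "crt".
make_selfsigned_pem() {
  local dir="$1" name="$2" cn="$3"
  mkdir -p "$dir" || return 1
  openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
    -subj "/CN=${cn}" \
    -keyout "${dir}/${name}.key" -out "${dir}/${name}.crt" 2>/dev/null || return 1
  cat "${dir}/${name}.crt" "${dir}/${name}.key" > "${dir}/${name}.pem"
  # The PEM contains the private key, so keep it readable by root only.
  chmod 600 "${dir}/${name}.key" "${dir}/${name}.pem"
}
```

For example: `make_selfsigned_pem /etc/ssl/iredmail.org iredmail.org mail.example.com`, where mail.example.com stands for whatever hostname your clients use.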

Enable and start the HAProxy service:

systemctl enable haproxy

systemctl start haproxy

Check the log for errors:

tail -f /var/log/messages

Allow the HTTP, HTTPS, and HAProxy stats ports:

firewall-cmd --zone=public --permanent --add-port=80/tcp

firewall-cmd --zone=public --permanent --add-port=443/tcp

firewall-cmd --zone=public --permanent --add-port=9000/tcp

firewall-cmd --complete-reload

Set up GlusterFS on the 2 mail servers (mail1 & mail2). First, add a new hard drive to each server; both drives should have the same capacity.

/etc/hosts:

192.168.1.3 mail1

192.168.1.4 mail2

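On mail1, partition the new disk: type 'n' to create a new partition, press Enter to accept the default for each question, then type 'w' to write the changes (don't forget this step):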

fdisk /dev/sdb

/sbin/mkfs.ext4 /dev/sdb1

mkdir /glusterfs1

Update /etc/fstab:

/dev/sdb1 /glusterfs1 ext4 defaults 1 2

Mount all file systems listed in /etc/fstab:

mount -a
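The /etc/fstab updates in this section can be made idempotent. A minimal bash sketch with a hypothetical helper `add_fstab_entry` that appends a line only when no existing, non-comment entry uses the same mount point:

```bash
#!/usr/bin/env bash
# add_fstab_entry (hypothetical helper): append an fstab line unless another
# non-comment entry already uses the same mount point (field 2).
add_fstab_entry() {
  local fstab="$1" device="$2" mountpoint="$3" fstype="$4" options="$5" dump="$6" pass="$7"
  touch "$fstab"
  if awk -v mp="$mountpoint" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit (found ? 0 : 1) }' "$fstab"; then
    echo "${mountpoint} is already in ${fstab}" >&2
    return 0
  fi
  printf '%s %s %s %s %s %s\n' "$device" "$mountpoint" "$fstype" "$options" "$dump" "$pass" >> "$fstab"
}
```

For example, on mail1: `add_fstab_entry /etc/fstab /dev/sdb1 /glusterfs1 ext4 defaults 1 2`, and later `add_fstab_entry /etc/fstab mail1:/mailrep-volume /var/vmail glusterfs defaults,_netdev 0 0`.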

On mail2, partition the new disk the same way: type 'n', press Enter to accept the default for each question, then type 'w' to write the changes:

fdisk /dev/sdb

/sbin/mkfs.ext4 /dev/sdb1

mkdir /glusterfs2

Update /etc/fstab:

/dev/sdb1 /glusterfs2 ext4 defaults 1 2

Mount all file systems listed in /etc/fstab:

mount -a

Install GlusterFS on both mail servers:

yum -y install epel-release

yum -y install centos-release-gluster38.noarch

yum -y install glusterfs glusterfs-fuse glusterfs-server

Enable and start the GlusterFS service:

systemctl enable glusterd.service

systemctl start glusterd.service

Stop and disable firewalld so that the GlusterFS peers can reach each other:

systemctl stop firewalld.service

systemctl disable firewalld.service

gluster peer probe mail2   # run on mail1

gluster peer probe mail1   # run on mail2

Check the peer status:

gluster peer status

Create the replicated volume (run this on one server only) and start it:

gluster volume create mailrep-volume replica 2 mail1:/glusterfs1/vmail mail2:/glusterfs2/vmail force

gluster volume start mailrep-volume

Check the volume:

gluster volume info mailrep-volume
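For this setup, `gluster volume info mailrep-volume` should report `Type: Replicate` and `Status: Started`. A bash sketch of a hypothetical check, `gluster_volume_ok`, that reads that output on stdin; it assumes the `Key: Value` line layout of the command and only looks at the first Type and Status lines.

```bash
#!/usr/bin/env bash
# gluster_volume_ok (hypothetical helper): read `gluster volume info <vol>`
# output on stdin; succeed if the first Type is Replicate and Status is Started.
gluster_volume_ok() {
  awk -F': ' '
    { sub(/^[ \t]+/, "") }   # tolerate leading whitespace (re-splits the fields)
    $1 == "Type" && type == ""     { type = $2 }
    $1 == "Status" && status == "" { status = $2 }
    END { exit ((type == "Replicate" && status == "Started") ? 0 : 1) }'
}
```

Usage: `gluster volume info mailrep-volume | gluster_volume_ok && echo "mailrep-volume is replicated and started"`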

On mail1, mount the volume on /var/vmail:

mkdir /var/vmail

mount.glusterfs mail1:/mailrep-volume /var/vmail/

Update /etc/fstab:

mail1:/mailrep-volume /var/vmail glusterfs defaults,_netdev 0 0

Mount all file systems listed in /etc/fstab:

mount -a

Check that the volume is mounted:

df -h

On mail2, mount the volume on /var/vmail:

mkdir /var/vmail

mount.glusterfs mail2:/mailrep-volume /var/vmail/

Update /etc/fstab:

mail2:/mailrep-volume /var/vmail glusterfs defaults,_netdev 0 0

Mount all file systems listed in /etc/fstab:

mount -a

Check that the volume is mounted:

df -h

Test the replication by creating some files on one of the mail servers:

cd /var/vmail; touch R1 R2 R3 R4 R5 R6

Make sure the files are synced by listing them on both servers:

ls -la /var/vmail
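To make the check explicit, run a small function on the server where you did not create the files. `missing_files` below is a hypothetical bash helper that prints every expected name that is not present and returns non-zero if any is missing:

```bash
#!/usr/bin/env bash
# missing_files (hypothetical helper): print each expected file name that does
# not exist in the directory; return 1 if at least one is missing.
missing_files() {
  local dir="$1" name rc=0
  shift
  for name in "$@"; do
    if [ ! -e "${dir}/${name}" ]; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `missing_files /var/vmail R1 R2 R3 R4 R5 R6 && echo "all test files are replicated"`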

Install the latest iRedMail on 2 servers (mail1 & mail2)

For installing iRedMail on CentOS, please check its installation guide: Install iRedMail on Red Hat Enterprise Linux, CentOS

Note

Install iRedMail on mail1 first; after it finishes, install it on mail2. It's better not to reboot right after installing iRedMail; wait until you have finished the remaining installation and configuration steps.

Don't forget to choose OpenLDAP as the backend and keep the default mail storage path: /var/vmail

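On mail1 (the LDAP provider), add the following to /etc/openldap/slapd.conf (moduleload belongs in the global section; the other lines go in the database section):

moduleload syncprov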

index entryCSN,entryUUID eq

overlay syncprov

syncprov-checkpoint 100 10

syncprov-sessionlog 200

On mail2 (the LDAP consumer), add the following to the database section of /etc/openldap/slapd.conf:

Attention

You can find the password of the bind DN cn=vmail,dc=xx,dc=xx in the Postfix LDAP query files under /etc/postfix/ldap/.

syncrepl rid=001

provider=ldap://mail1:389

searchbase="dc=iredmail,dc=kom"

bindmethod=simple

binddn="cn=vmail,dc=iredmail,dc=kom"

credentials=erec3xiThBUW9QnnU9Bnifp3434

schemachecking=on

type=refreshOnly

retry="60 +"

scope=sub

interval=00:00:01:00

attrs="*,+"
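The `credentials=` value is the vmail bind password mentioned in the Attention note. Postfix LDAP lookup tables keep it in the `bind_pw` parameter (see ldap_table(5)), so it can be read instead of copied by hand. A bash sketch with a hypothetical helper `vmail_bind_pw`; it assumes the files under /etc/postfix/ldap/ end in .cf and all use the same bind DN, and it returns the first `bind_pw` value it finds.

```bash
#!/usr/bin/env bash
# vmail_bind_pw (hypothetical helper): print the first "bind_pw = ..." value
# found in the Postfix LDAP lookup files (*.cf) of a directory.
vmail_bind_pw() {
  local dir="${1:-/etc/postfix/ldap}"
  awk -F '=' '
    $1 ~ /^[ \t]*bind_pw[ \t]*$/ {
      value = substr($0, index($0, "=") + 1)   # keep any "=" inside the password
      gsub(/^[ \t]+|[ \t]+$/, "", value)
      print value
      found = 1
      exit
    }
    END { exit (found ? 0 : 1) }' "$dir"/*.cf
}
```

Usage: `vmail_bind_pw /etc/postfix/ldap`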

On both mail servers, configure firewalld to accept the GlusterFS, LDAP, and database ports from the other server, or set your own rules:

firewall-cmd --permanent \

--zone=iredmail \

--add-rich-rule='rule family="ipv4" source address="192.168.1.3/24" port protocol="tcp" port="389" accept'

firewall-cmd --permanent \

--zone=iredmail \

--add-rich-rule='rule family="ipv4" source address="192.168.1.4/24" port protocol="tcp" port="3306" accept'

firewall-cmd --zone=iredmail --permanent --add-port=111/udp

firewall-cmd --zone=iredmail --permanent --add-port=24007/tcp

firewall-cmd --zone=iredmail --permanent --add-port=24008/tcp

firewall-cmd --zone=iredmail --permanent --add-port=24009/tcp

firewall-cmd --zone=iredmail --permanent --add-port=139/tcp

firewall-cmd --zone=iredmail --permanent --add-port=445/tcp

firewall-cmd --zone=iredmail --permanent --add-port=965/tcp

firewall-cmd --zone=iredmail --permanent --add-port=2049/tcp

firewall-cmd --zone=iredmail --permanent --add-port=38465-38469/tcp

firewall-cmd --zone=iredmail --permanent --add-port=631/tcp

firewall-cmd --zone=iredmail --permanent --add-port=963/tcp

firewall-cmd --zone=iredmail --permanent --add-port=49152-49251/tcp

Reload the firewall rules:

firewall-cmd --complete-reload
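The port list above can also be kept in one place. A bash sketch with a hypothetical helper `firewall_port_cmds` that prints one `firewall-cmd` command per port (ports given without a protocol default to TCP), so the commands can be reviewed before they are run:

```bash
#!/usr/bin/env bash
# firewall_port_cmds (hypothetical helper): print one firewall-cmd command per
# port for the given zone; review the output, then pipe it to "sh" to apply it.
firewall_port_cmds() {
  local zone="$1" port
  shift
  for port in "$@"; do
    # Ports given without a protocol default to TCP.
    [[ "$port" == */* ]] || port="${port}/tcp"
    printf 'firewall-cmd --zone=%s --permanent --add-port=%s\n' "$zone" "$port"
  done
}
```

For example, review the output of `firewall_port_cmds iredmail 111/udp 24007 24008 24009 139 445 965 2049 38465-38469 631 963 49152-49251`, then pipe it to `sh` and reload with `firewall-cmd --complete-reload`.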

Restart OpenLDAP service:

systemctl restart slapd

On mail1, update /etc/my.cnf (in the [mysqld] section):

server-id = 1

log_bin = /var/log/mariadb/mariadb-bin.log

log-slave-updates

log-bin-index = /var/log/mariadb/log-bin.index

log-error = /var/log/mariadb/error.log

relay-log = /var/log/mariadb/relay.log

relay-log-info-file = /var/log/mariadb/relay-log.info

relay-log-index = /var/log/mariadb/relay-log.index

auto_increment_increment = 10

auto_increment_offset = 1

binlog_do_db = amavisd

binlog_do_db = iredadmin

binlog_do_db = roundcubemail

binlog_do_db = sogo

binlog-ignore-db=test

binlog-ignore-db=information_schema

binlog-ignore-db=mysql

binlog-ignore-db=iredapd

replicate-ignore-db=test

replicate-ignore-db=information_schema

replicate-ignore-db=mysql

replicate-ignore-db=iredapd

Restart MariaDB service:

systemctl restart mariadb

On mail2, update /etc/my.cnf (in the [mysqld] section):

server-id = 2

log_bin = /var/log/mariadb/mariadb-bin.log

log-slave-updates

log-bin-index = /var/log/mariadb/log-bin.index

log-error = /var/log/mariadb/error.log

relay-log = /var/log/mariadb/relay.log

relay-log-info-file = /var/log/mariadb/relay-log.info

relay-log-index = /var/log/mariadb/relay-log.index

auto_increment_increment = 10

auto_increment_offset = 2

binlog_do_db = amavisd

binlog_do_db = iredadmin

binlog_do_db = roundcubemail

binlog_do_db = sogo

binlog-ignore-db=test

binlog-ignore-db=information_schema

binlog-ignore-db=mysql

binlog-ignore-db=iredapd

replicate-ignore-db=test

replicate-ignore-db=information_schema

replicate-ignore-db=mysql

replicate-ignore-db=iredapd

Restart MariaDB service:

systemctl restart mariadb
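Besides `server-id`, the setting that has to differ between the two masters is `auto_increment_offset`. With `auto_increment_increment = 10`, mail1 (offset 1) only generates AUTO_INCREMENT values from the series 1, 11, 21, ... and mail2 (offset 2) from 2, 12, 22, ..., so the keys generated on the two servers cannot collide. A bash sketch with two hypothetical helpers: one prints the per-node lines, the other lists the first values of a node's series.

```bash
#!/usr/bin/env bash
# mariadb_node_settings (hypothetical helper): print the [mysqld] lines that
# must differ per server; node 1 = mail1, node 2 = mail2.
mariadb_node_settings() {
  local node="$1" increment="${2:-10}"
  # MariaDB ignores auto_increment_offset if it is larger than the increment.
  if ! [[ "$node" =~ ^[1-9][0-9]*$ ]] || (( node > increment )); then
    echo "node must be between 1 and ${increment}" >&2
    return 1
  fi
  printf 'server-id = %s\n' "$node"
  printf 'auto_increment_increment = %s\n' "$increment"
  printf 'auto_increment_offset = %s\n' "$node"
}

# auto_increment_ids (hypothetical helper): first <count> values of the series
# offset, offset + increment, ... that a node with this offset draws from.
auto_increment_ids() {
  local offset="$1" count="$2" increment="${3:-10}"
  seq "$offset" "$increment" $(( offset + increment * (count - 1) ))
}
```

`mariadb_node_settings 2` prints the lines for mail2, and `auto_increment_ids 2 3` prints 2, 12 and 22.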

On mail1, log in to MariaDB as root and run:

create user 'replicator'@'%' identified by '12345678';

grant replication slave on *.* to 'replicator'@'%';

SHOW MASTER STATUS;

+--------------------+----------+----------------------------------------------+-------------------------------+
| File               | Position | Binlog_Do_DB                                 | Binlog_Ignore_DB              |
+--------------------+----------+----------------------------------------------+-------------------------------+
| mariadb-bin.000001 |      245 | amavisd,iredadmin,iredapd,roundcubemail,sogo | test,information_schema,mysql |
+--------------------+----------+----------------------------------------------+-------------------------------+

Note the values in the File and Position columns; mail2 uses them to start replicating from mail1.
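Instead of copying File and Position by hand, they can be read in batch mode: `mysql -N -B -e 'SHOW MASTER STATUS'` prints the same row tab-separated and without headers. A bash sketch with a hypothetical helper `change_master_sql` that turns that row into the CHANGE MASTER TO statement for the other server; the host, user, and password arguments are the example values from this tutorial.

```bash
#!/usr/bin/env bash
# change_master_sql (hypothetical helper): read one tab-separated row of
# SHOW MASTER STATUS (File, Position, ...) on stdin and print the matching
# CHANGE MASTER TO statement for the other server.
change_master_sql() {
  local master_host="$1" repl_user="$2" repl_pass="$3" file pos
  IFS=$'\t' read -r file pos _ || return 1
  [[ -n "$file" && "$pos" =~ ^[0-9]+$ ]] || return 1
  printf "CHANGE MASTER TO MASTER_HOST = '%s', MASTER_USER = '%s', MASTER_PASSWORD = '%s', MASTER_LOG_FILE = '%s', MASTER_LOG_POS = %s;\n" \
    "$master_host" "$repl_user" "$repl_pass" "$file" "$pos"
}
```

For example, on mail1: `mysql -N -B -e 'SHOW MASTER STATUS' | change_master_sql 192.168.1.3 replicator 12345678`, then paste the printed statement into MariaDB on mail2.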

On mail2, log in to MariaDB as root and run:

create user 'replicator'@'%' identified by '12345678';

grant replication slave on *.* to 'replicator'@'%';

stop slave;

CHANGE MASTER TO MASTER_HOST = '192.168.1.3', MASTER_USER = 'replicator', MASTER_PASSWORD = '12345678', MASTER_LOG_FILE = 'mariadb-bin.000001', MASTER_LOG_POS = 245;

start slave;

SHOW MASTER STATUS;

+--------------------+----------+----------------------------------------------+-------------------------------+
| File               | Position | Binlog_Do_DB                                 | Binlog_Ignore_DB              |
+--------------------+----------+----------------------------------------------+-------------------------------+
| mariadb-bin.000001 |      289 | amavisd,iredadmin,iredapd,roundcubemail,sogo | test,information_schema,mysql |
+--------------------+----------+----------------------------------------------+-------------------------------+

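show slave status\G

MASTER_LOG_FILE and MASTER_LOG_POS in the CHANGE MASTER TO statement above are the File and Position values of master mail1. Note the File and Position values shown for mail2 as well; mail1 needs them. Restart the MariaDB service on mail2: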

systemctl restart mariadb

On mail1, log in to MariaDB as root and run:

stop slave;

CHANGE MASTER TO MASTER_HOST = '192.168.1.4', MASTER_USER = 'replicator', MASTER_PASSWORD = '12345678', MASTER_LOG_FILE = 'mariadb-bin.000001', MASTER_LOG_POS = 289;

start slave;

show slave status\G

exit;

MASTER_LOG_FILE and MASTER_LOG_POS are the File and Position values of master mail2. Restart the MariaDB service on mail1:

systemctl restart mariadb
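On each server, the `show slave status\G` output should report `Yes` for both `Slave_IO_Running` and `Slave_SQL_Running`. A bash sketch of a hypothetical check, `replication_healthy`, that reads that output on stdin:

```bash
#!/usr/bin/env bash
# replication_healthy (hypothetical helper): read `SHOW SLAVE STATUS\G` output
# on stdin and succeed only if both replication threads report "Yes".
replication_healthy() {
  awk -F': ' '
    $1 ~ /Slave_IO_Running$/  { io = $2 }
    $1 ~ /Slave_SQL_Running$/ { sql = $2 }   # the $ anchor skips Slave_SQL_Running_State
    END { exit ((io == "Yes" && sql == "Yes") ? 0 : 1) }'
}
```

Usage: `mysql -e 'SHOW SLAVE STATUS\G' | replication_healthy && echo "replication is running"`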

To view the databases easily, you may want to install Adminer from http://adminer.org/ (a web-based SQL management tool that is just a single PHP file):